Companies using Google BERT

We have data on 65 companies that use Google BERT. The companies using Google BERT are most often found in the United States and in the Higher Education industry. Google BERT is most often used by companies with >10000 employees and >$1000M in revenue. Our data for Google BERT usage goes back 8 months.

Who uses Google BERT?

| Company | Website | Country | Revenue (USD) | Company Size (employees) |
|---|---|---|---|---|
| Wipro Ltd | wipro.com | India | >1000M | >10000 |
| Ernst & Young Global Limited | ey.com | United States | >1000M | >10000 |
| Tata Consultancy Services Ltd | tcs.com | India | >1000M | >10000 |
| Toptal | toptal.com | United States | 200M-1000M | 1000-5000 |
| HomeStars | homestars.com | Canada | 50M-100M | 200-500 |

Target Google BERT customers to accomplish your sales and marketing goals.

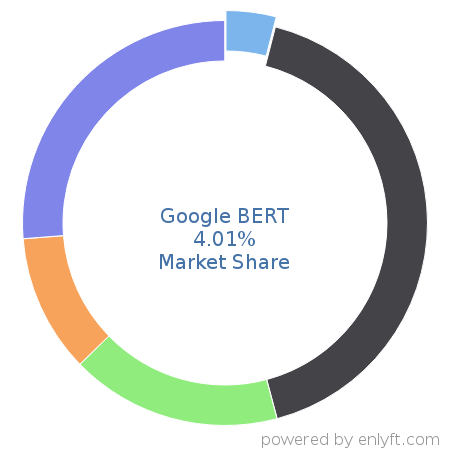

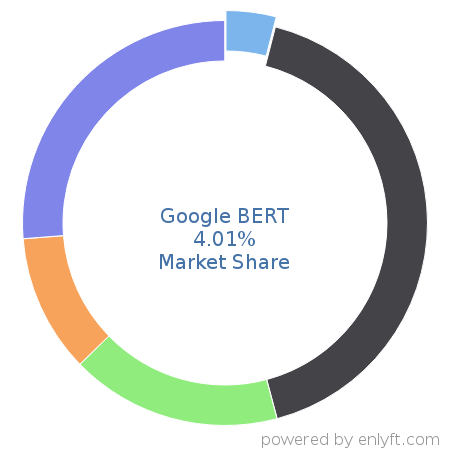

Google BERT Market Share and Competitors in Language Models

We use the best indexing techniques combined with advanced data science to monitor the market share of over 15,000 technology products, including those in the Language Models category. By scanning billions of public documents, we are able to collect deep insights on every company, with over 100 data fields per company on average. In the Language Models category, Google BERT has a market share of about 4.0%. Other major and competing products in this category include:

Language Models

What is Google BERT?

Google BERT (Bidirectional Encoder Representations from Transformers) is a deep learning language model based on the transformer architecture. It is used for NLP (Natural Language Processing) pre-training and fine-tuning, and it helps search engines better understand human language. It is an "encoder-only" transformer that consists of three modules: embedding, a stack of encoders, and un-embedding. It is pre-trained on unlabelled text using self-supervised language modelling tasks (masked language modelling and next-sentence prediction). Because its encoders attend to the whole input at once rather than reading left to right, BERT models can consider the full context of a word by looking at the words that come both before and after it, which is particularly useful for understanding the intent behind search queries.
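To illustrate what "looking at the words that come before and after it" means, here is a minimal Python sketch contrasting a bidirectional (encoder-style, as in BERT) attention mask with the causal, left-to-right mask used by decoder-only models. The helper name `visible_positions` and the example sentence are hypothetical illustrations, not part of any BERT library.

```python
def visible_positions(seq_len, position, bidirectional=True):
    """Hypothetical helper: positions that token `position` may attend to."""
    if bidirectional:
        # Encoder-only (BERT-style): every token sees the whole sequence,
        # including the words to its right.
        return list(range(seq_len))
    # Causal (decoder-style) mask: a token sees only itself and earlier tokens.
    return [j for j in range(seq_len) if j <= position]


tokens = ["the", "bank", "of", "the", "river"]
i = tokens.index("bank")
print([tokens[j] for j in visible_positions(len(tokens), i)])
# ['the', 'bank', 'of', 'the', 'river']
print([tokens[j] for j in visible_positions(len(tokens), i, bidirectional=False)])
# ['the', 'bank']
```

With the bidirectional mask, "bank" can use "river" to its right to settle its meaning; under the causal mask that word is invisible.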

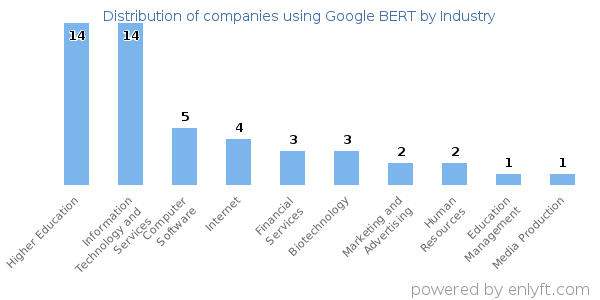
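BERT's pre-training on unlabelled text relies mainly on masked language modelling: some input tokens are hidden and the model is trained to recover them. In the original BERT recipe, 15% of token positions are selected, and of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. The sketch below assumes a plain list of already-tokenized words and a hypothetical `mask_tokens` helper; it decides each position with an independent coin flip, which is a simplification of the original procedure.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Hypothetical helper: build (inputs, labels) for masked language modelling."""
    rng = rng or random.Random()
    inputs = list(tokens)
    labels = [None] * len(tokens)  # None = position not used for the loss
    for i, tok in enumerate(tokens):
        # Simplification: select each position independently with mask_prob.
        if rng.random() >= mask_prob:
            continue
        labels[i] = tok  # the model must predict the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK  # 80%: replace with the mask token
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


inputs, labels = mask_tokens(
    ["the", "cat", "sat", "on", "the", "mat"],
    vocab=["dog", "ran", "hat"],
    rng=random.Random(0),
)
print(inputs)
print(labels)
```

In this sketch, positions whose label is `None` are ignored by the training loss, so only the selected positions contribute to learning.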

Top Industries that use Google BERT

Looking at Google BERT customers by industry, we find that Higher Education (20%), Information Technology and Services (20%), Computer Software (7%) and Internet (5%) are the largest segments.

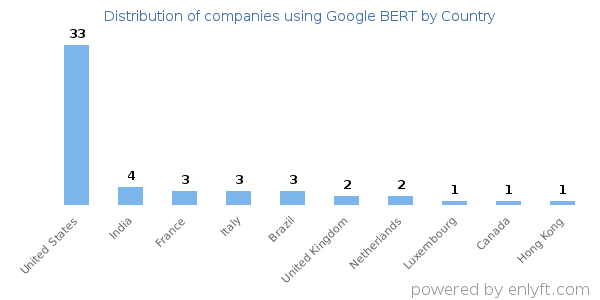

Top Countries that use Google BERT

50% of Google BERT customers are in the United States and 5% are in India.

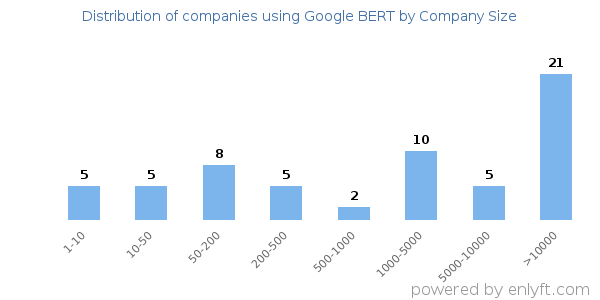

Distribution of companies that use Google BERT based on company size (Employees)

Of all the customers that are using Google BERT, a majority (54%) are large (>1000 employees), 14% are small (<50 employees) and 21% are medium-sized.

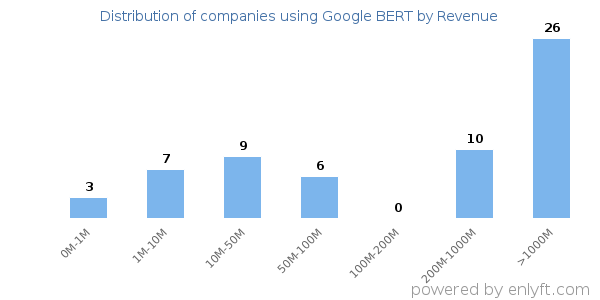

Distribution of companies that use Google BERT based on company size (Revenue)

Of all the customers that are using Google BERT, a majority (54%) are large (>$1000M), 23% are small (<$50M) and 9% are medium-sized.